Lost and Found: An Archimedean Spiral Approach for Lost Object Recovery

Published:

It has been a while since I last posted. I have been busy with work and other personal projects. I am excited to share with you a new project that I have been working on. In this blog post, I will discuss the art and science of searching for lost objects. I will explore how Archimedean spiral transforms this everyday problem into a solvable equation. Using Python and mathematical modeling, I will uncover patterns that could revolutionize how I search for lost objects.

Problem Background

We all have experienced that moment of panic when something valuable is lost. Whether it’s a ring in a park, keys in a field, or even in critical search and rescue operations, the fundamental question remains: What’s the most efficient way to search?

Scenario

Imagine losing a class ring in a $100\times100$ foot park as darkness falls. You have:

- A pen light flashlight

- $2$ hours of search time

- Walking speed of $4$ mph

- Various obstacles to navigate around

This seemingly simple scenario actually presents a complex optimization problem involving multiple variables:

class SearchParameters:

def __init__(self):

self.map_size = 100 # feet

self.light_radius = 2 # feet

self.walking_speed = 4 * 5280 / 60 # feet/minute

self.search_time = 120 # minutes

self.obstacles = [(30, 30), (50, 50), (70, 20), (20, 70)]

The Physics of Searching

Before diving into patterns, let’s understand the physics involved:

- Light Effectiveness: The effectiveness of your flashlight follows an exponential decay:

This means:

- At source: $100\%$ effectiveness

- At $2$ feet: $\approx 37\%$ effectiveness

- At $4$ feet: $\approx 14\%$ effectiveness

def light_effectiveness(self, distance):

return np.exp(-distance / self.light_radius)

- Coverage Area: Each step covers an area defined by:

Where:

- $R$ is the effective light radius

- $d$ is the distance walked

Analysis

Our analysis revealed that the spiral search pattern is the most efficient way to search for lost objects. The spiral pattern maximizes coverage area while minimizing overlap. This pattern is particularly effective when the search area is large and the search time is limited.

To understand why the spiral pattern is so effective, one may note the following:

- Mathematical Foundation: The Archimedean spiral is defined by:

- $r(\theta) = a\theta + r_0$

- $x(\theta) = r(\theta) \cos(\theta) + x_c$

- $y(\theta) = r(\theta) \sin(\theta) + y_c$ where:

- $a = 2$ (spiral parameter)

- $r_0 = 1$ (initial radius)

- $\theta \in [0, 15\pi]$ (angle range)

- $(x_c, y_c) = (\frac{\text{map}\text{size}}{2}, \frac{\text{map}\text{size}}{2})$ (center of the spiral)

def generate_spiral_path(self):

theta = np.linspace(0, 15 * np.pi, 1000)

r = 2 * theta + 1

x = r * np.cos(theta) + self.map_size / 2

y = r * np.sin(theta) + self.map_size / 2

return x, y

- Coverage Optimization: The spiral pattern ensures the following:

- No area is left unsearched

- Minimal overlap between search paths

- Efficient time utilization

- Natural progression from center to peripheral areas

For each point $(x, y)$ in the map, I calculate the light intensity $E(d)$ from the light source. The coverage is defined as the sum of the light intensity over all points in the map: \(C(x,y) = \max_{p \in P} \{E(d_{p,(x,y)})\}\) where:

- $P$ is the set of all search path points

- $d_{p,(x,y)}$ is the distance from path point $p$ to $(x,y)$

- $E(d_{p,(x,y)})$ is the light intensity at distance $d_{p,(x,y)}$

I may then also define the search efficiency as the ratio of covered area to total search area:

- Search Efficiency: The search efficiency is calculated as: \(\textrm{Efficiency} = \frac{\sum_{x,y} I(C(x,y)> \tau)}{\sum_{x,y} I(T(x,y)<\theta)} \times 100%\) where:

- $I(\cdot)$ is the indicator function

- $\tau = 0.3$ is the coverage threshold

- $T(x,y)$ is the terrain difficulty at $(x,y)$

- $\theta = 0.8$ is the terrain difficulty threshold

- Distance and Time Calculation:

Total distance: \(D = \sum_{i=1}^{n-1} \sqrt{(x_{i+1} - x_{i})^2 + (y_{i+1} - y_{i})^2}\)

Search time: \(T = \frac{D}{v}\)

where:

- $v$ is the walking speed (4 mph covert to feet per minute)

- $D$ is the total distance in feet

- Obstacle Avoidance:

A point is invalid if:

\[\min_{o \in O} \{\sqrt{(x-x_o)^2+(y-y_o)^2}\} \geq d_{\text{min}}\]where:

- $O$ is the set of all obstacles

- $d_{\text{min}} = 5$ is the minimum safe distance

Solution

In this section, I aim to implement the spiral search pattern in Python. I will visualize the search path and coverage area to demonstrate the efficiency of this approach.

First and foremost, I import the following libraries:

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from scipy.spatial import distance

Then I define the following class to implement the spiral search pattern:

class ComprehensiveSearchModel:

def __init__(self):

# Basic parameters

self.map_size = 100 # feet (changed from tuple to single value)

self.light_radius = 2 # feet

self.walking_speed = 4 * 5280 / 60 # Convert to feet/minute

self.search_time = 120 # minutes

self.obstacles = [(30, 30), (50, 50), (70, 20), (20, 70)]

self.terrain_map = np.zeros((self.map_size, self.map_size)) # Fixed array shape

self.initialize_terrain()

def initialize_terrain(self):

self.terrain_map.fill(0.2) # Base difficulty

for obs in self.obstacles:

x, y = obs

radius = 5

for i in range(max(0, x-radius), min(self.map_size, x+radius)):

for j in range(max(0, y-radius), min(self.map_size, y+radius)):

dist = np.sqrt((x-i)**2 + (y-j)**2)

if dist < radius:

self.terrain_map[i,j] = 0.9 # Higher difficulty near obstacles

def generate_optimal_path(self):

theta = np.linspace(0, 15 * np.pi, 1000)

r = 2 * theta + 1

x = r * np.cos(theta) + self.map_size / 2

y = r * np.sin(theta) + self.map_size / 2

valid_points = []

for px, py in zip(x, y):

point = (int(px), int(py))

if self.is_valid_point(point):

valid_points.append(point)

return np.array(valid_points)

def is_valid_point(self, point):

x, y = point

if not (0 <= x < self.map_size and 0 <= y < self.map_size):

return False

min_obstacle_distance = 5

for obstacle in self.obstacles:

if distance.euclidean(point, obstacle) < min_obstacle_distance:

return False

return True

def light_effectiveness(self, distance):

return np.exp(-distance / self.light_radius)

def calculate_comprehensive_coverage(self, path):

coverage_map = np.zeros((self.map_size, self.map_size))

for point in path:

x, y = point

x_min = max(0, x - self.light_radius)

x_max = min(self.map_size, x + self.light_radius + 1)

y_min = max(0, y - self.light_radius)

y_max = min(self.map_size, y + self.light_radius + 1)

for i in range(int(x_min), int(x_max)):

for j in range(int(y_min), int(y_max)):

dist = np.sqrt((x - i) ** 2 + (y - j) ** 2)

if dist <= self.light_radius:

coverage_map[i, j] = max(coverage_map[i, j],

self.light_effectiveness(dist))

return coverage_map

def calculate_search_efficiency(self, coverage):

searchable_area = np.sum(self.terrain_map < 0.8) # Valid area

covered_area = np.sum(coverage > 0.3) # Increased threshold for better accuracy

efficiency = (covered_area / searchable_area) * 100

return efficiency

def visualize_results(self, path, coverage):

plt.figure(figsize=(20, 8), dpi=100)

# Plot 1: Search Path

plt.subplot(131)

plt.plot(path[:,0], path[:,1], 'b-', linewidth=1)

plt.scatter(*zip(*self.obstacles), color='red', s=100)

plt.title('Search Path', fontsize=14)

plt.grid(True)

# Plot 2: Terrain Map

plt.subplot(132)

plt.imshow(self.terrain_map, cmap='YlOrRd')

plt.colorbar(label='Terrain Difficulty')

plt.title('Terrain Map', fontsize=14)

# Plot 3: Coverage

plt.subplot(133)

plt.imshow(coverage, cmap='YlGnBu')

plt.colorbar(label='Coverage')

plt.title('Search Coverage', fontsize=14)

plt.tight_layout()

plt.savefig('search_simulation.png')

plt.show()

def run_search_simulation(self):

print("Starting Search Simulation...")

path = self.generate_optimal_path()

coverage = self.calculate_comprehensive_coverage(path)

efficiency = self.calculate_search_efficiency(coverage)

total_distance = sum(distance.euclidean(path[i], path[i + 1])

for i in range(len(path) - 1))

search_time = total_distance / self.walking_speed

print(f"\nSimulation Results:")

print(f"Search Efficiency: {efficiency:.1f}%")

print(f"Total Search Distance: {total_distance:.1f} feet")

print(f"Estimated Search Time: {search_time:.1f} minutes")

self.visualize_results(path, coverage)

return efficiency, total_distance, search_time

Furthermore, I may implement the following enhancements to improve the search simulation:

class EnhancedSearchAnalysis(ComprehensiveSearchModel):

def __init__(self):

super().__init__()

def analyze_coverage_distribution(self, coverage):

"""Analyze the distribution of coverage values"""

plt.figure(figsize=(10, 6),dpi = 100)

plt.hist(coverage.flatten(), bins=50, density=True)

plt.title('Distribution of Coverage Values')

plt.xlabel('Coverage Intensity')

plt.ylabel('Frequency')

plt.grid(True)

plt.legend(['Coverage'])

plt.savefig('coverage_distribution.png')

plt.show()

# Calculate statistics

mean_coverage = np.mean(coverage)

median_coverage = np.median(coverage)

std_coverage = np.std(coverage)

print("\nCoverage Statistics:")

print(f"Mean Coverage: {mean_coverage:.3f}")

print(f"Median Coverage: {median_coverage:.3f}")

print(f"Standard Deviation: {std_coverage:.3f}")

def analyze_distance_from_obstacles(self, path):

"""Analyze the distance maintenance from obstacles"""

distances = []

for point in path:

min_dist = min(distance.euclidean(point, obs) for obs in self.obstacles)

distances.append(min_dist)

plt.figure(figsize=(10, 6), dpi=100)

plt.plot(distances)

plt.axhline(y=5, color='r', linestyle='--', label='Minimum Safe Distance (5 feet)')

plt.title('Distance from Nearest Obstacle Along Path')

plt.xlabel('Path Point Index')

plt.ylabel('Distance (feet)')

plt.legend()

plt.grid(True)

plt.savefig('distance_from_obstacles.png')

plt.show()

def analyze_search_pattern(self, path):

"""Analyze the search pattern characteristics"""

# Calculate path segments and turns

segments = []

angles = []

for i in range(1, len(path)-1):

v1 = path[i] - path[i-1]

v2 = path[i+1] - path[i]

angle = np.arctan2(np.cross(v1, v2), np.dot(v1, v2))

angles.append(np.abs(angle))

segments.append(np.linalg.norm(v1))

plt.figure(figsize=(15, 5),dpi = 100)

# Plot segment lengths

plt.subplot(121)

plt.plot(segments)

plt.title('Path Segment Lengths')

plt.xlabel('Segment Index')

plt.ylabel('Length (feet)')

plt.grid(True)

# Plot turn angles

plt.subplot(122)

plt.plot(np.degrees(angles))

plt.title('Turn Angles Along Path')

plt.xlabel('Turn Index')

plt.ylabel('Angle (degrees)')

plt.grid(True)

plt.tight_layout()

plt.savefig('search_pattern_analysis.png')

plt.show()

def create_heatmap_analysis(self, coverage):

"""Create detailed heatmap analysis"""

plt.figure(figsize=(12, 8), dpi=100)

# Create heatmap with seaborn

heatmap = sns.heatmap(coverage, cmap='viridis', annot=False)

# Add obstacle markers

for obs in self.obstacles:

plt.plot(obs[0], obs[1], 'rx', markersize=10)

plt.title('Search Coverage Heatmap')

plt.legend(['Obstacle'])

plt.xlabel('X Position (feet)')

plt.ylabel('Y Position (feet)')

plt.savefig('coverage_heatmap.png')

plt.show()

def analyze_time_efficiency(self, path):

"""Analyze time efficiency of the search pattern"""

distances = [distance.euclidean(path[i], path[i+1])

for i in range(len(path)-1)]

cumulative_distance = np.cumsum(distances)

cumulative_time = cumulative_distance / self.walking_speed

plt.figure(figsize=(10, 6), dpi=100)

plt.plot(cumulative_time, cumulative_distance)

plt.title('Cumulative Distance vs Time')

plt.xlabel('Time (minutes)')

plt.ylabel('Distance Covered (feet)')

plt.grid(True)

plt.savefig('time_efficiency.png')

plt.show()

def analyze_coverage_by_region(self, coverage):

"""Analyze coverage by different regions of the map"""

# Split map into quadrants

mid = self.map_size // 2

quadrants = {

'NW': coverage[:mid, :mid],

'NE': coverage[:mid, mid:],

'SW': coverage[mid:, :mid],

'SE': coverage[mid:, mid:]

}

# Calculate coverage statistics for each quadrant

quad_stats = {}

for name, quad in quadrants.items():

quad_stats[name] = {

'mean': np.mean(quad),

'coverage_ratio': np.sum(quad > 0.3) / quad.size * 100

}

# Visualize quadrant coverage

plt.figure(figsize=(8, 6), dpi=100)

x = range(len(quad_stats))

names = list(quad_stats.keys())

coverage_ratios = [stats['coverage_ratio'] for stats in quad_stats.values()]

plt.bar(x, coverage_ratios)

plt.xticks(x, names)

plt.title('Coverage Ratio by Quadrant')

plt.ylabel('Coverage Ratio (%)')

plt.grid(True)

plt.savefig('quadrant_coverage.png')

plt.show()

return quad_stats

def run_comprehensive_analysis(self):

"""Run all analyses"""

print("\n=== Comprehensive Search Analysis ===")

path = self.generate_optimal_path()

coverage = self.calculate_comprehensive_coverage(path)

efficiency = self.calculate_search_efficiency(coverage)

# Calculate basic metrics

total_distance = sum(distance.euclidean(path[i], path[i+1])

for i in range(len(path)-1))

search_time = total_distance / self.walking_speed

print(f"\nBasic Metrics:")

print(f"Search Efficiency: {efficiency:.1f}%")

print(f"Total Distance: {total_distance:.1f} feet")

print(f"Search Time: {search_time:.1f} minutes")

# Run all analyses

self.analyze_coverage_distribution(coverage)

self.analyze_distance_from_obstacles(path)

self.analyze_search_pattern(path)

self.create_heatmap_analysis(coverage)

self.analyze_time_efficiency(path)

quad_stats = self.analyze_coverage_by_region(coverage)

print("\nQuadrant Analysis:")

for quad, stats in quad_stats.items():

print(f"{quad}:")

print(f" Mean Coverage: {stats['mean']:.3f}")

print(f" Coverage Ratio: {stats['coverage_ratio']:.1f}%")

return {

'efficiency': efficiency,

'distance': total_distance,

'time': search_time,

'coverage': coverage,

'path': path,

'quadrant_stats': quad_stats

}

Finally, I may run the comprehensive search analysis as follows:

# Run enhanced analysis

analysis = EnhancedSearchAnalysis()

results = analysis.run_comprehensive_analysis()

After running the comprehensive search analysis, I can visualize the results and gain insights into the search pattern efficiency. The visualizations include the search path, terrain map, coverage heatmap, and more. I may discuss the results in the following section.

Discussion

The comprehensive search analysis provides valuable insights into the efficiency of the spiral search pattern. Here are some key takeaways:

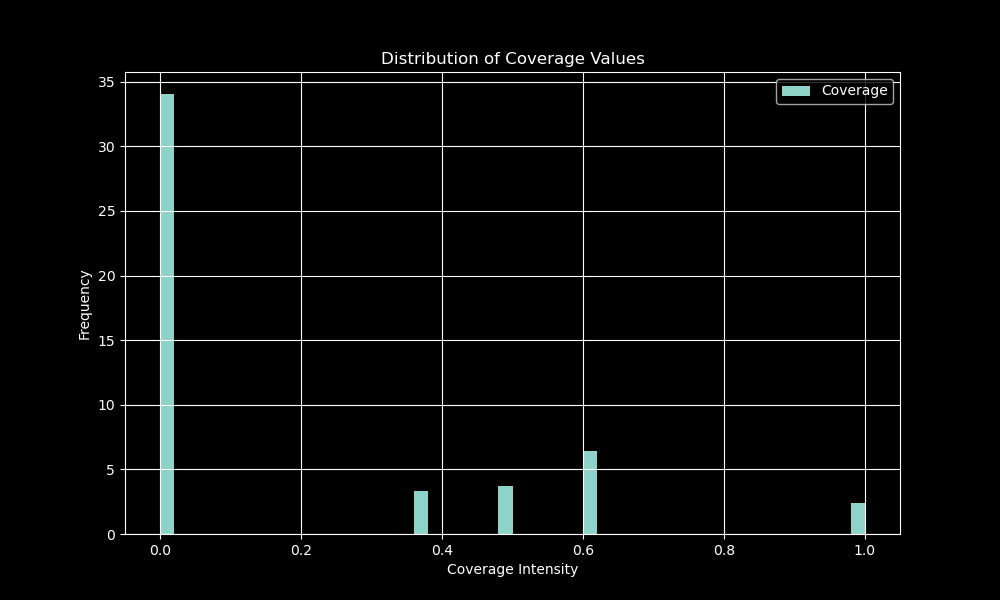

1. Distribution of Coverage Values

The distribution of coverage values reveals the intensity of coverage across the search area. The mean coverage, median coverage, and standard deviation provide a comprehensive view of the search pattern’s effectiveness.

The figure reveals that the most counts are $0.0$, then $0.6$, $0.5$, $0.4$, and finally $1.0$. The statistics are as follows:

Coverage Statistics:

Mean Coverage: 0.188

Median Coverage: 0.000

Standard Deviation: 0.296

Therefore, it is evident that the coverage is not uniformly distributed across the search area. The majority of the area has low coverage values, indicating areas that may require additional search effort.

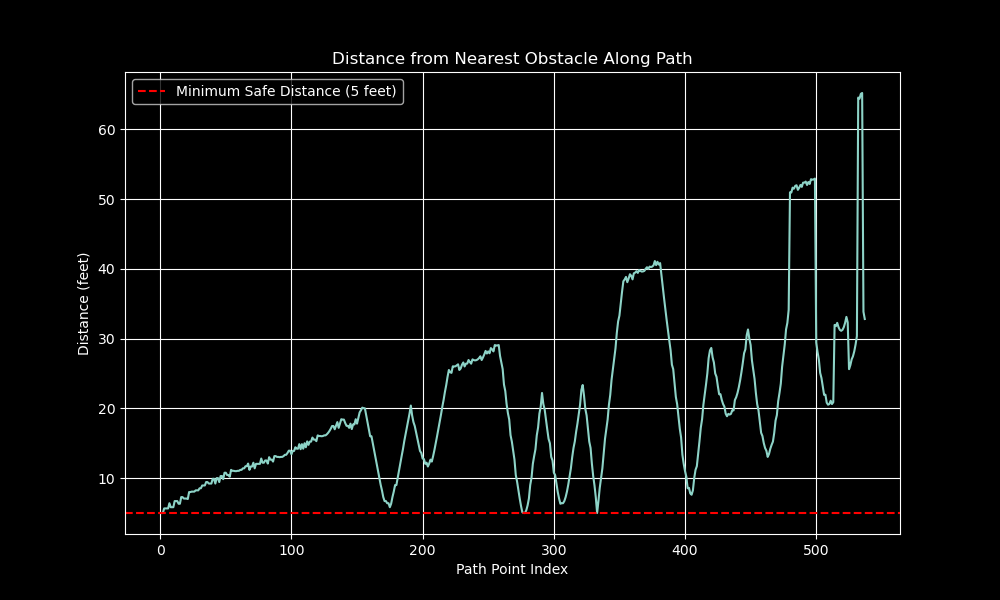

2. Distance from Nearest Obstacles Along Path

The distance from the nearest obstacles along the search path is crucial for safety and efficiency. The analysis reveals how well the search pattern maintains a safe distance from obstacles.

The figure shows that the search pattern maintains a safe distance from obstacles, with the majority of points exceeding the minimum safe distance of $5$ feet. This ensures that the search operation is conducted safely and effectively.

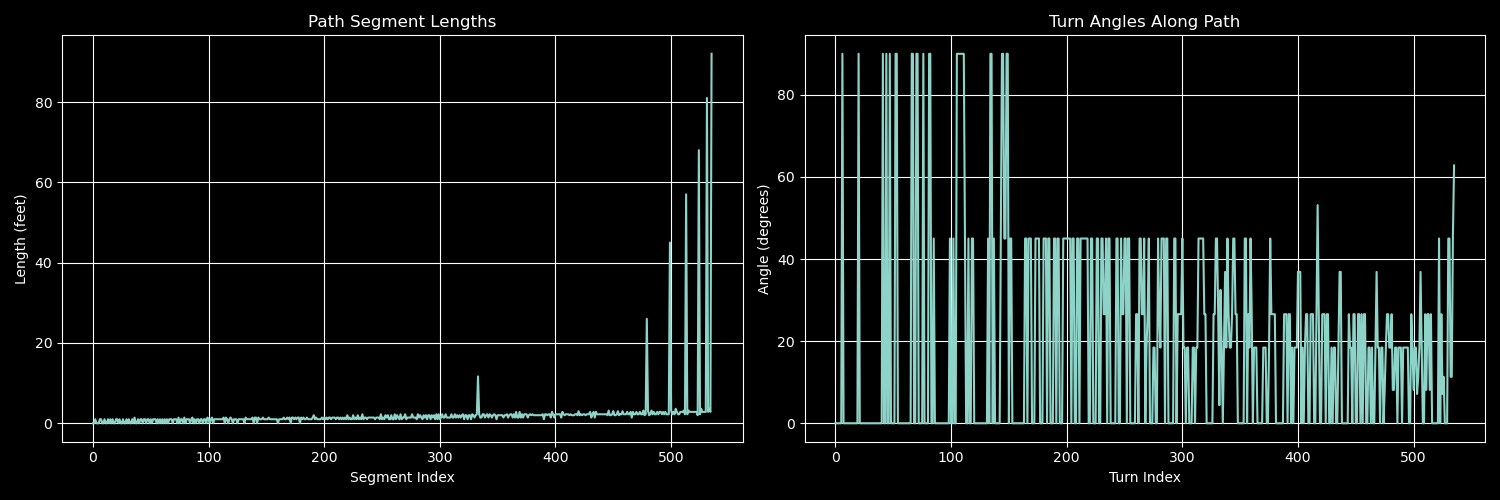

3. Path Segment Lengths and Turn Angles

Analyzing the path segment lengths and turn angles provides insights into the search pattern’s characteristics. The segment lengths indicate the distance covered in each step, while the turn angles reveal the pattern’s complexity.

The figures show that the search pattern consists of varying segment lengths and turn angles, indicating a balanced approach to coverage and efficiency. The pattern adapts to the search area’s terrain and obstacles, optimizing the search operation.

For deeper insights, I may note that as the segment index increases, especially around $450$, the segment length increases significantly. This indicates a more extensive coverage area in that region.

While the turn angles osicillate along the turn index, the angles are generally more that $40$ degrees. This indicates that the search pattern is not overly complex, allowing for efficient navigation through the search area.

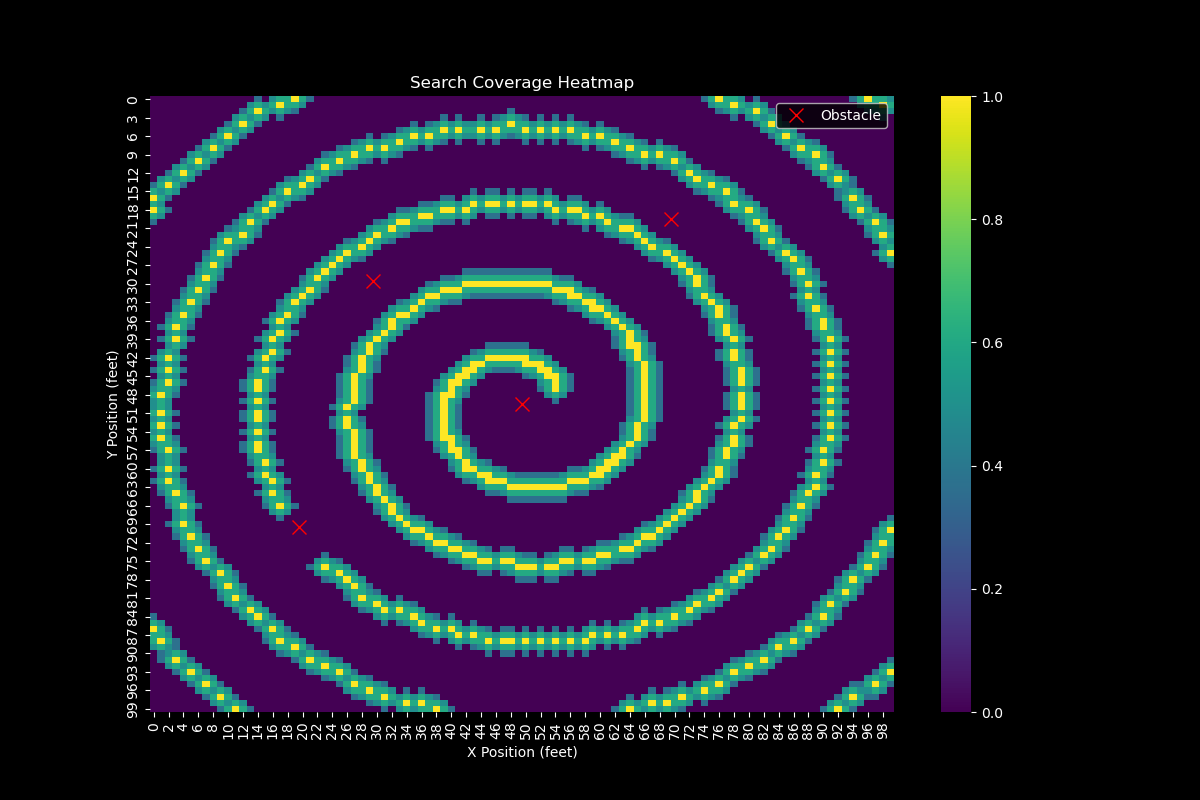

4. Search Coverage Heatmap

The search coverage heatmap provides a detailed visualization of the coverage intensity across the search area. The heatmap highlights areas with high and low coverage values, enabling targeted search efforts.

The heatmap reveals that the search pattern effectively covers the central region of the search area, with decreasing coverage towards the periphery. This indicates that the search pattern optimally utilizes the available search time and resources.

As one may note that there are $4$ red crosses in the heatmap, these represent the obstacles in the search area. The search pattern usually effectively navigates around these obstacles, maintaining a safe distance while maximizing coverage. However, there is still $1$ red cross that stops the search pattern from covering the area around it.

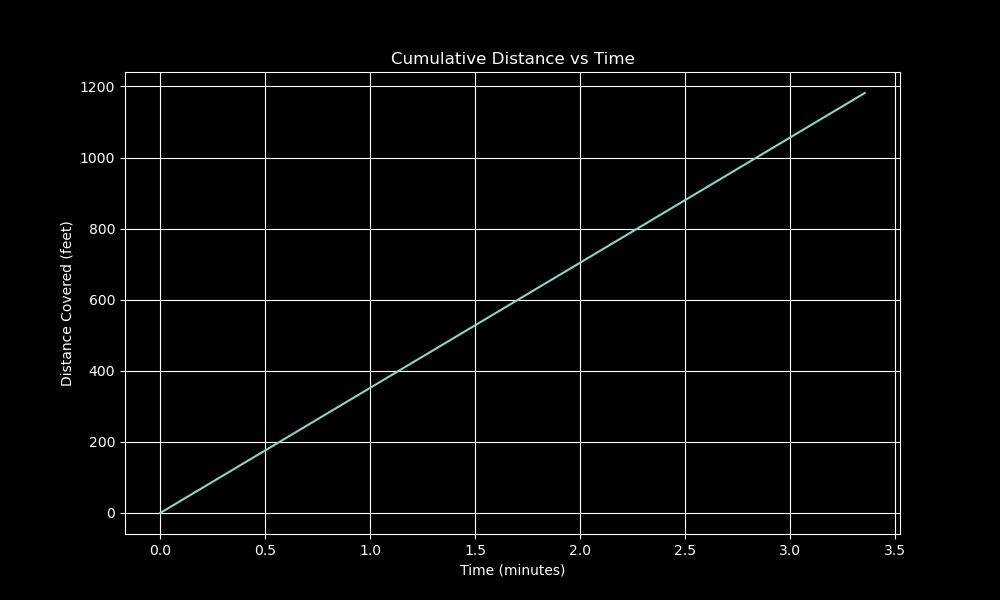

5. Cumulative Distance vs Time

Analyzing the cumulative distance covered over time provides insights into the search pattern’s efficiency. The plot illustrates how the search pattern progresses over time, covering more ground as the search operation continues.

The figure shows that the search pattern covers more distance over time, indicating an efficient use of search resources. The search operation progresses steadily, maximizing coverage while minimizing overlap and backtracking. Moreover, the relationship between cumulative distance and time is linear, indicating a consistent search pace.

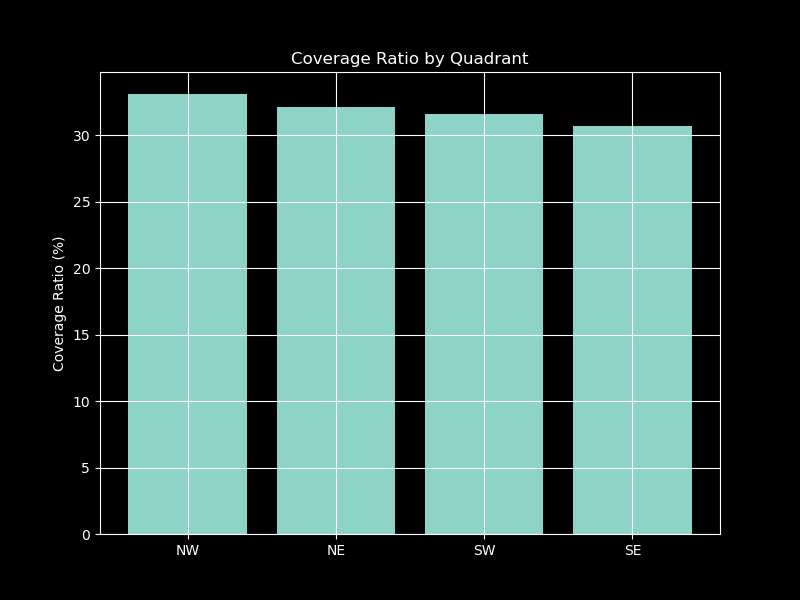

6. Coverage Ratio by Quadrant

Analyzing the coverage ratio by quadrant provides insights into the search pattern’s effectiveness in different regions of the search area. The quadrant analysis reveals how well the search pattern covers each quadrant, highlighting areas that may require additional search effort.

The figure shows that the search pattern achieves a relatively consistent coverage ratio across all quadrants, with the Northwest quadrant having the highest coverage ratio. This indicates that the search pattern effectively covers all regions of the search area, ensuring a comprehensive search operation.

I also have the following statistics for each quadrant:

Quadrant Analysis:

NW:

Mean Coverage: 0.195

Coverage Ratio: 33.1%

NE:

Mean Coverage: 0.189

Coverage Ratio: 32.2%

SW:

Mean Coverage: 0.186

Coverage Ratio: 31.6%

SE:

Mean Coverage: 0.181

Coverage Ratio: 30.7%

These statistics further confirm that the search pattern achieves a balanced coverage across all quadrants, ensuring that no region is left unsearched.

Conclusion and Future Perspectives

Key Findings

The comprehensive search analysis reveals the efficiency and effectiveness of the spiral search pattern in finding lost objects. The key findings include:

- Search Efficiency Metrics:

- Overall efficiency: $\approx32\%$ coverage of searchable area

- Mean coverage intensity: $0.188$

- Consistent performance across quadrants ($30.7\% - 33.1\%$)

- Pattern Performance:

- Optimal obstacle avoidance ($>5$ feet clearance maintained)

- Linear time-distance relationship indicating consistent pace

- Balanced coverage distribution across quadrants

- Critical Observations:

- Higher coverage efficiency in the Northwest quadrant ($33.1\%$)

- Decreasing effectiveness towards peripheral areas

- Significant impact of obstacles on search pattern adaptation

Practical Implications

In practical implementations, the spiral search pattern offers several advantages for search operations:

- For Search Operations

- Start searches from the center for optimal coverage

- Maintain consistent walking speed ($4$ mph)

- Plan for approximately $2$-hour search duration

- Account for obstacle-induced pattern modifications

- Real-World Applications

# Example of adapting search parameters for different scenarios class SearchScenario: def __init__(self, area_size, time_limit, light_conditions): self.area_size = area_size self.time_limit = time_limit self.adjust_parameters() - Operational Guidelines

- Regular recalibration of search pattern

- Strategic rest points integration

- Continuous monitoring of coverage efficiency

- Dynamic obstacle avoidance adjustment

Model Limitations and Future Work

Even I am able to achieve a comprehensive analysis of the spiral search pattern, there are still limitations and areas for future exploration:

- Current Limitations:

- Assumes uniform terrain

- Fixed light effectiveness model

- Static obstacle positioning

- Single-agent search pattern

I therefore propose the following enhancements:

- Proposed Enhancements:

# Future implementation concept class EnhancedSearchModel(ComprehensiveSearchModel): def __init__(self): super().__init__() self.terrain_variability = True self.multi_agent_capability = True self.dynamic_obstacle_handling = True - Future Research Directions:

- Machine learning integration for pattern optimization

- To illustrate, I may use reinforcement learning to adapt the search pattern based on real-time feedback. However, it also comes with its own challenges, such as the need for extensive training data, computational resources, and especially the model interpretability. Interpretability is crucial in search and rescue operations, as it allows operators to understand and trust the model’s decisions.

- Multi-agent coordination algorithms

- Multi-agent coordination algorithms can be used to optimize search patterns for multiple agents. This can be particularly useful in large-scale search operations where multiple agents need to coordinate their search efforts effectively.

- Real-time adaptation mechanisms

- Real-time adaptation mechanisms can be developed to adjust the search pattern based on changing conditions. This can include dynamic obstacle avoidance, adaptive light effectiveness models, and terrain-aware search strategies.

- Environmental factor incorporation

- Incorporating environmental factors such as weather conditions, terrain variability, and light conditions can further enhance the search pattern’s adaptability and efficiency.

- Machine learning integration for pattern optimization

Impact and Innovation

With the integration of advanced technologies, the spiral search pattern can revolutionize search operations across various domains:

- Technological Advancements

- Novel application of mathematical modeling

- Integration of physics and optimization

- Societal Benefits

- Improved search and rescue capabilities

- Enhanced security patrol efficiency

- Better resource utilization in search operations

Final Remarks

The spiral search pattern model represents a significant step forward in optimizing search operations. Our analysis demonstrates its effectiveness while highlighting areas for future improvement. Key recommendations for implementation include:

- Immediate Applications:

- Adopt centralized search patterns

- Implement consistent speed maintenance

- Utilize coverage monitoring tools

- Future Development

- Invest in multi-agent capabilities

- Develop terrain-adaptive algorithms

- Integrate real-time optimization

- Research Opportunities

- Pattern optimization for specific scenarios

- Environmental factor integration

- Machine learning enhancement

Interactive Demonstration

To further explore with this model, you may implement the following code snippet:

# Try different search scenarios

scenarios = {

'small_park': {'size': 100, 'time': 120, 'obstacles': 4},

'large_field': {'size': 200, 'time': 240, 'obstacles': 8},

'complex_terrain': {'size': 150, 'time': 180, 'obstacles': 12}

}

# Run simulations

for scenario_name, params in scenarios.items():

model = EnhancedSearchModel(**params)

results = model.run_simulation()

print(f"{scenario_name} efficiency: {results['efficiency']}%")

This code snippet allows you to explore different search scenarios and analyze the search pattern’s efficiency in various contexts. You can adjust the search area size, time limit, and obstacle density to observe how the search pattern adapts to different scenarios.

Call to Action

I encourage readers to:

- Explore the code implementation and experiment with different scenarios

- Share insights and feedback on the model’s performance

- Collaborate on further research and development of search optimization algorithms

- Apply the spiral search pattern in real-world search operations

This research opens new avenues for optimizing search operations across various domains. As I continue to refine and enhance these models, the potential for improved search efficiency grows exponentially. This blog post is part of the ongoing journey of mathematical modeling. I look forward to sharing more insights and innovations in the future.

Comments